Overcoming Challenges to Generative AI Success

July 17, 2024

Introduction

Generative AI, characterized by its capacity to autonomously generate content, models, or solutions, holds profound promise across diverse sectors. By creating realistic images and text and enabling novel products, Gen AI is transforming the global economy, particularly in industries such as medicine, entertainment, and finance.

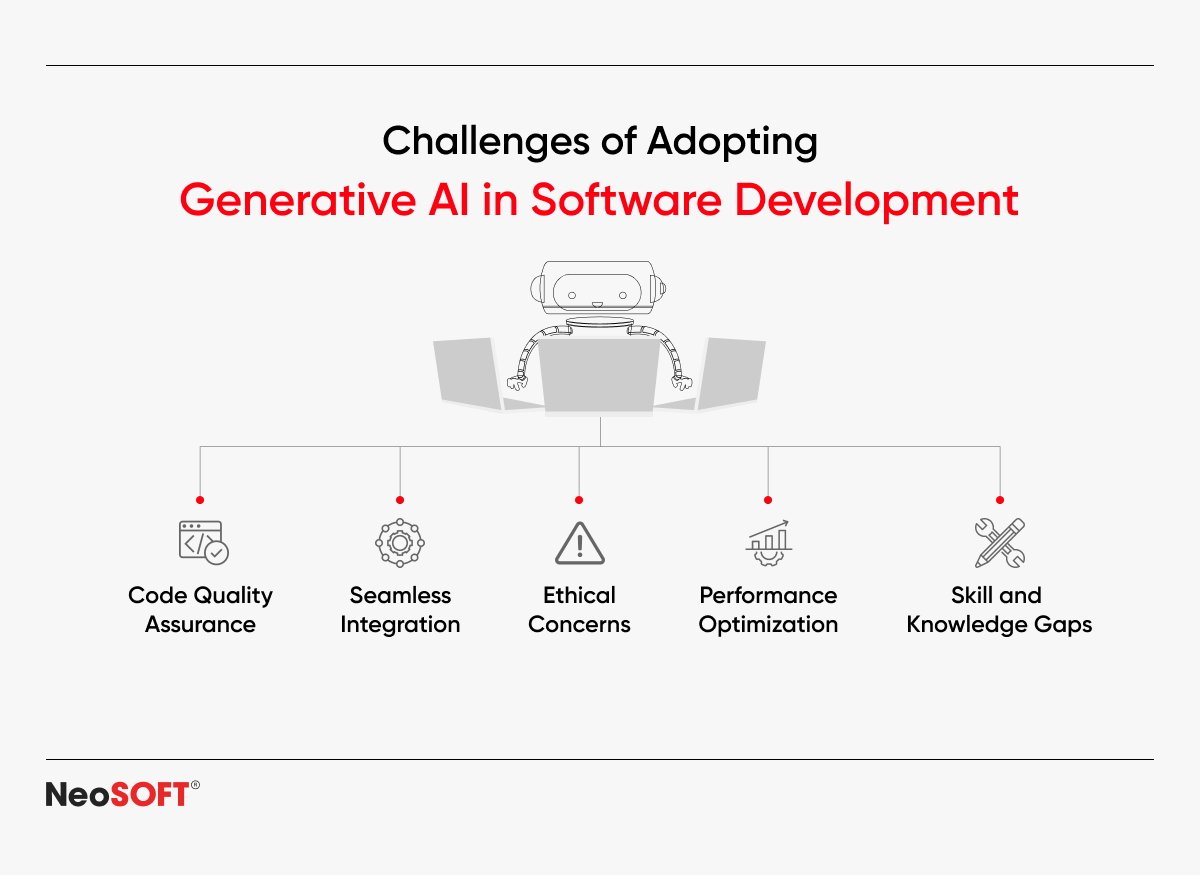

Understanding and overcoming the hurdles that accompany Gen AI systems is critical to realizing their full potential. Enterprises that want to capitalize fully on Gen AI breakthroughs must address technical complexity, data requirements, ethical considerations, resource demands, and integration challenges.

This blog is for software developers, data scientists, AI professionals and enthusiasts, in addition to leaders and decision-makers from many sectors. It provides actionable insights, tactics, and practical solutions for efficiently and responsibly overcoming potential obstacles in generative AI projects.

Challenges in Gen AI Adoption

Data Quality and Quantity

High-quality data is the bedrock of effective AI training, directly influencing the accuracy and reliability of generated outputs. Good training data lets generative AI models learn meaningful patterns and produce sound, trustworthy content, which is critical for use cases ranging from healthcare diagnostics to financial forecasting.

Acquiring large, diverse datasets can be difficult because of privacy concerns, data silos, and the cost of data collection. Curating these datasets involves cleaning and annotating the data and ensuring that it accurately reflects the real-world conditions that AI applications will encounter.
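Cleaning is the most mechanical part of curation. As a minimal sketch, assuming a dataset of short text records, the hypothetical helper `clean_text_records` below normalizes whitespace, drops empty entries, and removes case-insensitive exact duplicates. Real curation pipelines typically add near-duplicate detection, annotation checks, and domain-specific validation on top of this.

```python
import re


def clean_text_records(records):
    """Normalize whitespace, drop empty records, and remove exact duplicates
    (compared case-insensitively), keeping the first occurrence of each."""
    seen = set()
    cleaned = []
    for record in records:
        # Collapse runs of whitespace to a single space and trim the ends.
        text = re.sub(r"\s+", " ", record).strip()
        if not text:
            continue  # skip empty or whitespace-only records
        key = text.lower()
        if key in seen:
            continue  # skip duplicates that differ only in case or spacing
        seen.add(key)
        cleaned.append(text)
    return cleaned


raw = ["  Patient reports  mild fever. ", "patient reports mild fever.", "", "Follow-up in two weeks."]
print(clean_text_records(raw))  # ['Patient reports mild fever.', 'Follow-up in two weeks.']
```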

Solutions:

- Data augmentation – Increases dataset diversity by creating new training examples from existing ones using techniques such as rotation, cropping, or adding noise. This enriches the dataset without collecting additional data.

- Synthetic data generation – Produces artificial data that closely resembles real-world conditions, allowing AI systems to learn from situations that are rare or difficult to capture in real data. This strategy is useful in areas such as autonomous driving and robotics.

- Robust data pipelines – Well-designed pipelines ensure that data flows smoothly from collection through pre-processing to model training. Automation and monitoring within these pipelines help maintain data consistency and quality over time.
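The augmentation techniques named above (rotation, cropping, and adding noise) can be sketched for a grayscale image stored as a NumPy array. This is a minimal illustration, assuming pixel values in [0, 1] and a crop size smaller than both image dimensions; `augment_image` is a hypothetical helper, and production code would usually rely on an established library such as torchvision's transforms.

```python
import numpy as np


def augment_image(image, rng, crop_size, noise_std=0.05):
    """Return one augmented copy of `image` (an H x W float array in [0, 1])."""
    # Rotation: rotate by a random multiple of 90 degrees.
    k = int(rng.integers(0, 4))
    out = np.rot90(image, k)
    # Cropping: take a random crop_size x crop_size window from the rotated image.
    h, w = out.shape
    top = int(rng.integers(0, h - crop_size + 1))
    left = int(rng.integers(0, w - crop_size + 1))
    out = out[top:top + crop_size, left:left + crop_size]
    # Noise: add Gaussian noise, then clip back to the valid pixel range.
    out = out + rng.normal(0.0, noise_std, size=out.shape)
    return np.clip(out, 0.0, 1.0)


rng = np.random.default_rng(seed=0)
image = rng.random((32, 32))  # stand-in for a real grayscale image
augmented = [augment_image(image, rng, crop_size=24) for _ in range(5)]
print(len(augmented), augmented[0].shape)  # 5 (24, 24)
```

Each call draws fresh random parameters, so one original image yields several distinct training examples without any new data collection.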

Computational Power and Resources

Training generative AI models, particularly those based on deep learning architectures, requires substantial computational resources, including powerful GPUs and specialized hardware accelerators that can handle the heavy computation involved in processing massive datasets and complex algorithms. Deployment also requires significant resources to ensure that models perform well in real-time applications.

Many companies, particularly small enterprises and start-ups, may find high-performance computing resources for deep learning and machine learning prohibitively expensive. The costs of purchasing the necessary equipment, maintaining it, and covering the associated operating expenses can be considerable. Furthermore, access to these resources may be limited by geographical and infrastructure constraints, leading to disparities in AI development and deployment capabilities.

Solutions:

- Cloud computing – Cloud platforms such as AWS, Google Cloud, and Azure offer scalable, flexible computing power on demand. Organizations can access high-performance computing capacity without large upfront hardware investments, and pay-as-you-go pricing allows for more effective cost management.

- Distributed computing – Distributed computing frameworks such as Apache Spark or Hadoop spread computational workloads across many machines. This can shorten processing and training times and make better use of existing resources, facilitating work with large-scale data and sophisticated models.

- Efficient algorithm design – More efficient algorithms reduce computational load. Model pruning, quantization, and knowledge distillation all help build lighter models that need less processing power while still performing well. Research into more efficient neural network architectures and training methods also helps reduce computing demands.
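Two of the techniques named above, magnitude pruning and post-training quantization, can be illustrated on a single weight matrix with NumPy. This is a simplified sketch, assuming unstructured pruning and symmetric per-tensor int8 quantization; the helper names are hypothetical, knowledge distillation is not shown, and real frameworks (for example, PyTorch's pruning and quantization utilities) also handle per-channel scales, calibration, and fine-tuning.

```python
import numpy as np


def magnitude_prune(weights, sparsity):
    """Zero out roughly the `sparsity` fraction of weights with the smallest
    magnitude (weights tied with the threshold are pruned as well)."""
    k = int(sparsity * weights.size)
    if k == 0:
        return weights.copy()
    threshold = np.sort(np.abs(weights), axis=None)[k - 1]
    return np.where(np.abs(weights) <= threshold, 0.0, weights)


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: weights ~= scale * q, q in [-127, 127]."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q, scale):
    """Map int8 values back to approximate float weights."""
    return q.astype(np.float32) * scale


rng = np.random.default_rng(seed=0)
w = rng.normal(size=(256, 256)).astype(np.float32)
pruned = magnitude_prune(w, sparsity=0.5)
q, scale = quantize_int8(pruned)
error = np.max(np.abs(dequantize(q, scale) - pruned))
print(f"zeros: {np.mean(pruned == 0):.2f}, bytes: {w.nbytes} -> {q.nbytes}, max error: {error:.4f}")
```

Storing int8 values instead of float32 cuts weight storage by a factor of four, and the reconstruction error per weight is bounded by half the quantization step (`scale / 2`).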

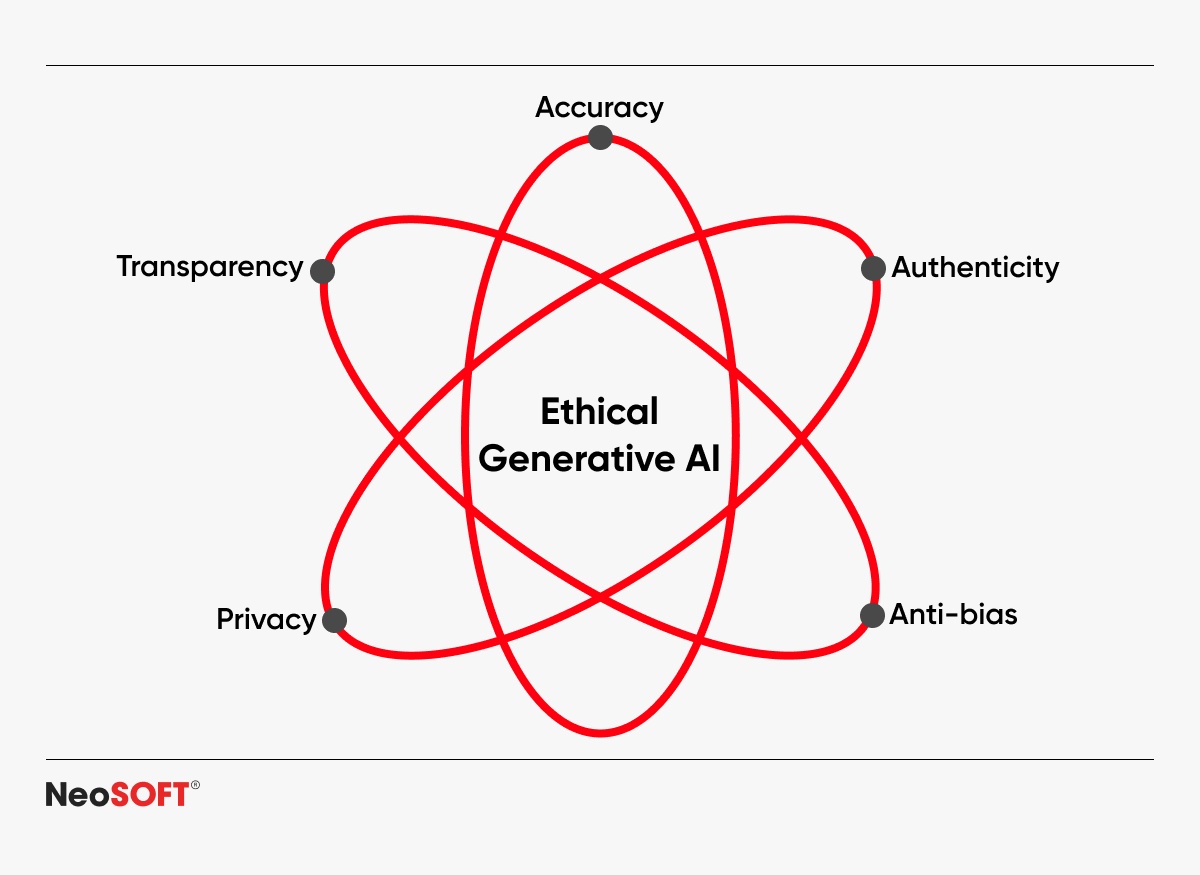

Ethical and Bias Concerns

Generative AI models can unintentionally perpetuate and amplify biases present in their training data, producing unfair or prejudicial outputs. These concerns are especially important in applications involving critical judgments, such as recruiting, finance, and law enforcement. Ethical dilemmas arise when AI-generated content, data, or decisions affect people’s rights, opportunities, and privacy.

Ensuring fairness in AI algorithms is critical to avoid reinforcing social disparities. Transparency is required to understand how generative AI makes decisions, enabling stakeholders to trust its outputs. Accountability measures are required to hold developers and organizations responsible for the consequences of their AI systems and to ensure that they follow ethical norms and regulations.

Solutions:

- Bias detection and mitigation techniques:

  - Pre-processing – Techniques such as resampling, reweighting, and synthetic data generation can help create balanced datasets with minimal bias before training.

  - In-processing – Adversarial debiasing algorithms and fairness constraints can be applied during training to reduce bias in what the model learns.

  - Post-processing – Methods such as equalized odds post-processing adjust a trained model's outputs (for example, by choosing group-specific decision thresholds) so that true positive and false positive rates are similar across groups.

- Ethical guidelines – Developing and following comprehensive ethical guidelines for AI development and deployment is critical. These guidelines should address fairness, transparency, accountability, and privacy. Organizations can form ethics committees to evaluate AI programs and ensure that they meet ethical standards.

- Diverse data representation – It is vital to ensure that the datasets used to train AI models are diverse and represent a wide variety of demographic groups. This lowers the risk of bias and improves the generalizability of AI models. Collaborating with diverse communities and stakeholders can provide helpful perspectives and support early detection of potential biases and ethical concerns during development.
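The reweighting technique listed under pre-processing can be sketched in a few lines. This follows the well-known reweighing scheme of Kamiran and Calders, assuming a single sensitive attribute and binary labels; the function names and toy data are hypothetical. Each example receives the weight P(group) · P(label) / P(group, label), so under-represented group and label combinations count more during training.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Per-example weights w(g, y) = P(g) * P(y) / P(g, y).

    After weighting, group membership and label are statistically
    independent in the training data."""
    n = len(labels)
    count_group = Counter(groups)
    count_label = Counter(labels)
    count_joint = Counter(zip(groups, labels))
    return [
        (count_group[g] / n) * (count_label[y] / n) / (count_joint[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Share of (weighted) positive labels within one group."""
    num = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    den = sum(w for g, w in zip(groups, weights) if g == group)
    return num / den


# Toy data: group A has 75% positive labels, group B only 25%.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)
for g in ("A", "B"):
    print(g, round(weighted_positive_rate(groups, labels, weights, g), 3))  # both print 0.5
```

The weights are then passed to any training routine that accepts per-sample weights, leaving the underlying records unchanged.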

Compliance and Regulatory Frameworks

Data protection laws, such as India’s new Digital Personal Data Protection (DPDP) Act of 2023 and the EU’s General Data Protection Regulation (GDPR), in force since 2018, place strict restrictions on how personal and sensitive data is collected, processed, and used.

Compliance with these regulations is essential to reduce legal risk, protect data, and uphold high ethical standards in the creation and application of generative AI models. AI-specific rules are also being developed to address transparency, accountability, bias reduction, and the ethical use of generative AI capabilities.

Solutions:

- Proactive compliance strategies:

  - Risk assessments – Conducting thorough risk assessments to identify the legal and regulatory obligations that apply before AI models are deployed.

  - Compliance frameworks – Creating robust compliance frameworks that incorporate ethical, legal, and technical considerations into AI development processes.

  - Regular audits – Conducting regular audits to verify ongoing compliance with industry regulations and standards.

  - Adaptive policies – Adopting agile policy-making processes that can respond quickly to regulatory changes and to updates in the AI models under development.

Integration with Existing Systems

Integrating AI technologies into older, often obsolete legacy systems can prove challenging. These systems may lack the adaptability and compatibility required to incorporate advanced AI technology effectively, which can result in data silos, inconsistent data formats, and inefficient workflows. A team accustomed to legacy systems may also resist new technologies, further complicating integration.

Maintaining seamless interoperability between generative AI applications and existing systems is vital to minimizing disruption. This requires ensuring that new generative AI tools communicate reliably with legacy systems without causing downtime or performance problems. Disruptions can cause operational inefficiencies as well as production and financial losses.

Solutions:

- Modular architectures – Designing generative AI systems with a modular framework enables incremental integration. Each module can operate independently and connect to specific components of the existing legacy system, lowering the risk of wider disruption. Modular architectures also simplify troubleshooting and maintenance, since errors can be isolated within specific components.

- API integrations – Application programming interfaces (APIs) connect gen AI tools to legacy systems. APIs serve as intermediaries, translating data and queries between software components to ensure compatibility. They can be tailored to specific integration requirements, making it easier to connect different systems and automate procedures.

- Phased implementation – Deploying AI solutions in stages rather than in a single large-scale rollout reduces risk and facilitates gradual adoption. Begin with pilot initiatives or specific divisions before extending across the organization. A phased deployment makes it possible to collect feedback, identify issues early, and make necessary adjustments, resulting in a smoother transition and greater employee acceptance.
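As a minimal sketch of an API acting as an intermediary, the adapter below translates a record from a legacy system's export format into a JSON request for a generative AI service. The pipe-delimited format, the `LegacyTicket` type, and the payload fields (`task`, `input`, `metadata`) are all hypothetical; the sketch stops at building the request, since the actual endpoint and schema depend on the service being integrated.

```python
import json
from dataclasses import dataclass


@dataclass
class LegacyTicket:
    """A record as exported by a hypothetical legacy support system."""
    ticket_id: str
    customer: str
    body: str


def parse_legacy_line(line: str) -> LegacyTicket:
    """Parse a pipe-delimited export line such as 'T-1001|ACME|Printer offline'.

    Only the first two pipes are treated as separators, so the body may contain '|'."""
    ticket_id, customer, body = (field.strip() for field in line.split("|", 2))
    return LegacyTicket(ticket_id, customer, body)


def to_genai_request(ticket: LegacyTicket) -> str:
    """Translate a legacy record into a JSON payload for a gen AI service.

    The payload schema here is an assumption for illustration only."""
    payload = {
        "task": "summarize_and_suggest_reply",
        "input": ticket.body,
        "metadata": {"ticket_id": ticket.ticket_id, "customer": ticket.customer},
    }
    return json.dumps(payload)


request = to_genai_request(parse_legacy_line("T-1001 | ACME | Printer offline since Monday"))
print(request)
```

Keeping the translation in a small adapter like this means the legacy system and the AI service can each change independently; only the adapter needs updating.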

Future Trends in Generative Artificial Intelligence

Generative AI continues to evolve, driven by rapid technological and methodological advances.

Large language models such as GPT-4 and Gemini are becoming more factually accurate and contextually aware, enabling more nuanced natural language understanding and generation. These models will enable increasingly sophisticated and natural interactions in customer support technologies such as chatbots and virtual assistants.

Multimodal artificial intelligence combines text, images, audio, and video in a single generative AI model, enabling more versatile applications and richer, more interactive user experiences. This integration also enhances image generation and content creation for virtual and augmented reality.

Federated learning improves data privacy by training AI models across multiple decentralized devices, so sensitive data remains local while still contributing to model development. This could be especially valuable in industries such as healthcare and finance, where data privacy and security are critical.
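Federated averaging (FedAvg) is a widely used way to combine models trained on separate devices. The sketch below simulates it in NumPy for a simple linear-regression model, assuming three clients whose data never leave them and a server that only receives model weights; the function names are hypothetical, and a real deployment would add secure aggregation, client sampling, and a deep learning framework, all omitted here.

```python
import numpy as np


def local_update(weights, X, y, lr=0.1, epochs=20):
    """Run full-batch gradient descent on one client's private data
    (mean squared error for a linear model)."""
    w = weights.copy()
    for _ in range(epochs):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)
        w -= lr * grad
    return w


def federated_averaging(global_w, clients, rounds=10):
    """FedAvg: each client trains locally, then the server averages the returned
    weights, weighted by each client's number of examples. Raw data stays local."""
    for _ in range(rounds):
        updates = [local_update(global_w, X, y) for X, y in clients]
        sizes = np.array([len(y) for _, y in clients], dtype=float)
        global_w = np.average(updates, axis=0, weights=sizes)
    return global_w


rng = np.random.default_rng(seed=0)
true_w = np.array([2.0, -1.0])
clients = []
for n in (50, 80, 120):  # three clients with different amounts of data
    X = rng.normal(size=(n, 2))
    y = X @ true_w + rng.normal(scale=0.1, size=n)
    clients.append((X, y))

global_w = federated_averaging(np.zeros(2), clients)
print(np.round(global_w, 2))
```

The learned weights end up close to `true_w` even though no client ever shares its raw `X` or `y` with the server.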

Generative adversarial networks (GANs) continue to improve at creating realistic images and other content for media, entertainment, and fashion, opening new AI research avenues and project opportunities for creative businesses. These innovations could transform the art, architecture, digital advertising, and visual effects industries.

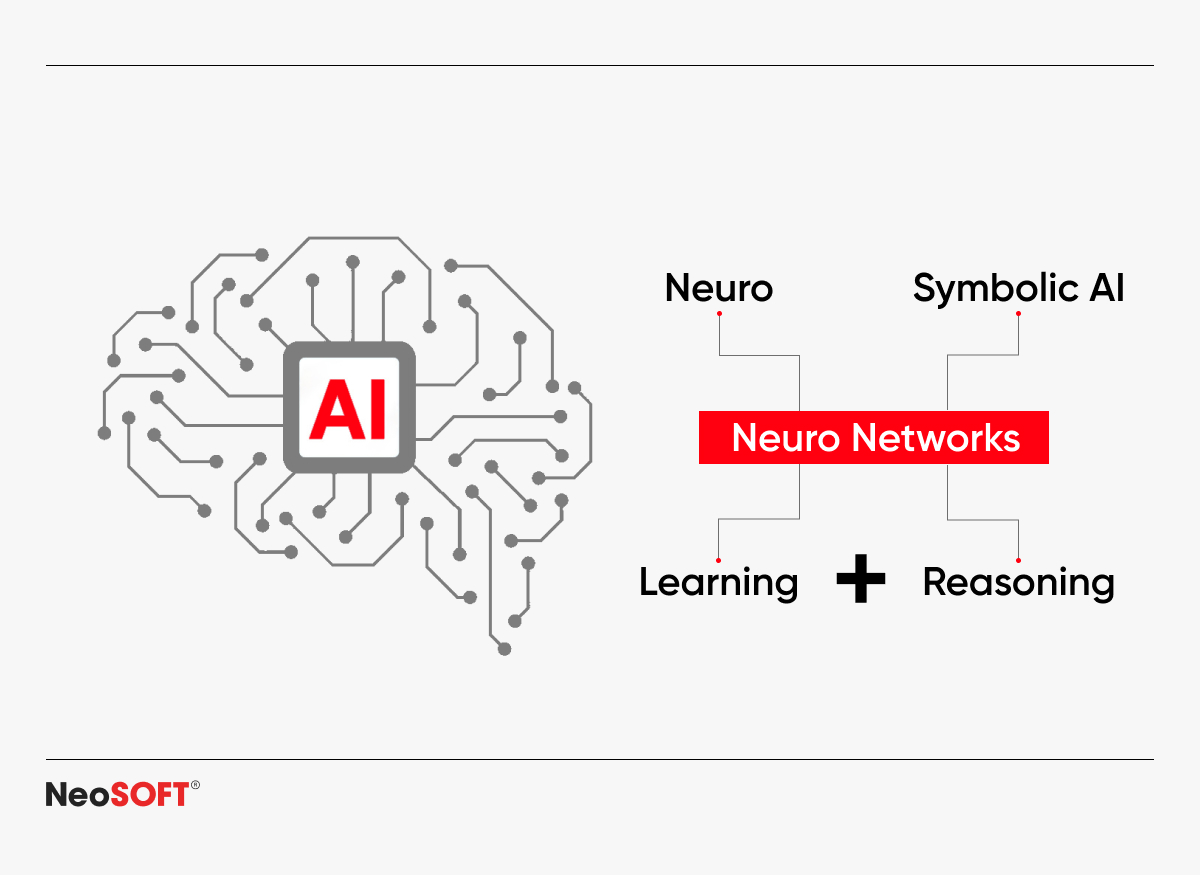

Neuro-symbolic AI combines neural networks with symbolic reasoning to improve interpretability and decision-making. This makes AI systems more reliable and transparent in challenging situations, enhancing their ability to tackle complex problems in domains such as legal reasoning and scientific research.

Conclusion

Overcoming the hurdles of implementing generative AI is essential to realizing its transformative potential. Addressing data quality, computing resources, ethical implications, regulatory compliance, and legacy system integration can drive considerable progress across a variety of industries. As these barriers are overcome, the benefits of generative AI and machine learning models will become more widely accessible, accelerating innovation and efficiency across the board.

Our highly trained team of AI experts is ready to help you navigate generative AI issues and achieve AI success. Reach out to us today at [email protected].
